As new technologies advance, a plethora of products and services are being designed to respond to new needs. In the future, having digital humans as assistants, performers, clerks, coworkers — and perhaps friends too — might become the norm. In this article, we will look at how new and older technologies are being used to create holograms, digital humans, and, finally, deep fakes — reflecting on the possibilities they might bring in the future.

HOLOGRAMS

You might have watched the Black Mirror episode where (spoiler alert) pop star Ashley O, played by Miley Cyrus, is “recreated” in the form of a euphoric doll-speaker and, later, when she’s kept in a coma, in the form of a hologram performing songs extracted from her brain waves. The episode makes the viewer reflect on many themes. One is that an artificial construct of a person may not represent their real personality at all, despite having the same looks and mannerisms. Another is that such constructs might be created without the real person’s consent.

Holograms aren’t only found in dystopian sci-fi series; they have already been used for a while to stand in for people who are unable to attend events and even to bring dead people “back”.

The risk of falling into the Uncanny Valley

A recent example of a hologram being used to stand in for a person can be found in K-Pop group BTS’s performance at the 2020 Mnet Asian Music Awards. One member of the group couldn’t participate because of an injury, so the group was joined on stage by a hologram of the missing member, allowing the seven to perform together.

Some fans reacted strongly to the projection — made by Vive Studios — asserting that the hologram was… a bit too realistic.

Bringing dead artists back

Many singers and songwriters who have passed away have been brought back with holograms… or almost. Strictly speaking, a hologram is a 3D projection of diffracted light that retains the depth of the original physical entity it shows. The “holograms” used to put dead artists back on stage have their roots in a centuries-old technique called Pepper’s Ghost. This method uses a reflective glass surface that is angled towards a booth located underneath the stage. The image of the actors in the booth is reflected by the glass so that it appears on stage, looking translucent. This effect gives the illusion that the projection is 3D, when in reality it is two-dimensional.
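The geometry behind Pepper’s Ghost can be sketched in a few lines. The snippet below is only an illustration under simplifying assumptions that are not taken from any real stage setup: the scene is reduced to a 2D side view (x is horizontal distance, y is height), the glass is a flat mirror passing through the origin, and the 45-degree tilt and 2-metre booth depth are made-up values. The function name `reflect_across_glass` is hypothetical. It shows why a performer hidden below the stage appears to stand at stage level: the audience sees the mirror image of the booth.

```python
import math


def reflect_across_glass(point, glass_angle_deg=45.0):
    """Mirror a 2D point (x, y) across a glass sheet passing through the origin.

    The glass is modelled as a line tilted glass_angle_deg above the
    horizontal stage floor (an assumed, idealised flat mirror).
    """
    theta = math.radians(glass_angle_deg)
    # Unit normal to the glass line, whose direction is (cos theta, sin theta).
    nx, ny = -math.sin(theta), math.cos(theta)
    x, y = point
    # Signed distance from the point to the glass.
    d = x * nx + y * ny
    # The virtual image lies the same distance away on the other side.
    return (x - 2 * d * nx, y - 2 * d * ny)


# Hypothetical performer standing 2 m below the glass, in the hidden booth.
performer = (0.0, -2.0)
ghost = reflect_across_glass(performer)
print(f"Ghost appears at x={ghost[0]:.2f} m, y={ghost[1]:.2f} m")
```

With these assumed numbers the ghost lands at stage height (y close to 0), 2 metres along the stage from the glass, even though the performer never leaves the booth.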

2Pac’s hologram, which was shown at Coachella and went viral in 2012, was created using an adapted version of this technique. The newer technique, invented by Musion Eyeliner Projections, uses a thin sheet of Mylar to display digital versions of real people.

What we know as holograms today are basically a combination of visual effects (VFX) and Pepper’s Ghost. This technique was also used in 2014 to allow Michael Jackson to moonwalk to one of his songs released after his death, and in 2018 to allow opera singer Maria Callas to perform, 41 years after her death. Even Whitney Houston’s hologram, produced by UK-based company Base Hologram, was due to go on tour from April 2020, but the pandemic forced the tour to be postponed.

Even though holograms enable us to create fantastic experiences, giving new life to people who have passed away, they pose an ethical dilemma: should we be projecting hologram performances of deceased people without having obtained their consent whilst they were alive?

Base Hologram admitted that the Amy Winehouse hologram tour, which they planned and eventually postponed, encountered ‘unique challenges and sensitivities’. The ethical discussion on whether to make the posthumous hologram concert happen is still taking place today.

Fighting against animal cruelty with holograms

While creating holographic humans leads to fundamental ethical dilemmas, replacing animals with beautiful holograms could help fight animal cruelty. German Circus Roncalli ditched flesh-and-blood animals for holographic ones, including elephants, horses, and lions. This was possible thanks to a collaboration between the circus and Bluebox, which used Optoma projectors to bring the cruelty-free circus to life.

The hologram you can feel and hear

Researchers at the School of Engineering and Informatics of the University of Sussex built a tactile hologram, a 3D projection that involves a light, floating material being manipulated using ultrasound waves. These 3D projections can be touched and emit sounds. The system, called Multimodal Acoustic Trap Display (MATD), uses an LED projector, a speaker array, and a foam bead. The speakers emit ultrasound waves that suspend the bead in the air and rapidly move it, allowing it to create illusions as it reflects light from the projector.

These illusions, called volumetric images, resemble holograms, but, unlike them, their shapes can be seen from all angles. They can also be felt; even if inaudible, ultrasound is still a mechanical wave and carries energy through the air. This type of hologram only produces relatively simple shapes, but perhaps in the future it will be possible to create human-like figures. This would lead to unimaginable possibilities. What would you do if you could hear and feel the hologram of your friend or family member?
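To get a feel for why the bead in the MATD has to move so rapidly, here is a rough back-of-the-envelope calculation. It assumes, as a rule of thumb that does not come from the article, that the eye blends the moving bead into a continuous shape only if the whole outline is traced within about a tenth of a second. The 1 cm circle and the function name `required_bead_speed` are likewise hypothetical, chosen purely for illustration.

```python
import math


def required_bead_speed(path_length_m, refresh_window_s=0.1):
    """Average speed needed to trace a path once per refresh window.

    refresh_window_s is an assumed persistence-of-vision window,
    not a measured property of the MATD.
    """
    return path_length_m / refresh_window_s


# Hypothetical shape: a circle with a 1 cm radius.
radius_m = 0.01
circle_path_m = 2 * math.pi * radius_m

speed = required_bead_speed(circle_path_m)
print(f"Bead must average about {speed:.2f} m/s")
```

Even this tiny circle calls for an average speed of roughly 0.63 m/s under these assumptions, which hints at why more detailed, human-like figures remain out of reach for now.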

Real-time holograms

Evercoast is a computer vision and 3D sensing software company that can turn people into holograms in real time, using 5G. The company’s mission is to shift how people communicate from 2D to 3D. This technology can give life to new shopping experiences, change corporate training and more. For example, you could experience the dressing room of the future, send a stream to friends, ask for feedback in real time, communicate with your holographic boss or visit your 3D physician. This type of 3D content could be displayed on TV monitors, on glass cube displays, on a mobile phone or even in an AR headset.

DIGITAL HUMANS

Digital humans, unlike holograms, aren’t a close copy and replacement of a real person; instead, they exist independently, with their own personality and, in many cases, with an incredibly realistic appearance.

Interactivity at the expense of realism

Hatsune Miku is a blue-haired teenage pop star from Sapporo, Japan. Although she looks more like a manga character than a real human, she has still managed to become a pop singer who hosts concerts around the world. Hatsune is, in reality, a Vocaloid software voicebank developed by Crypton Future Media.

Some people have also attempted to bring virtual humans to life by animating them through motion capture (mocap). One example is Miko, an interactive V-streamer who is mocapped live by a technician using facial tracking.

Realism at the expense of interactivity

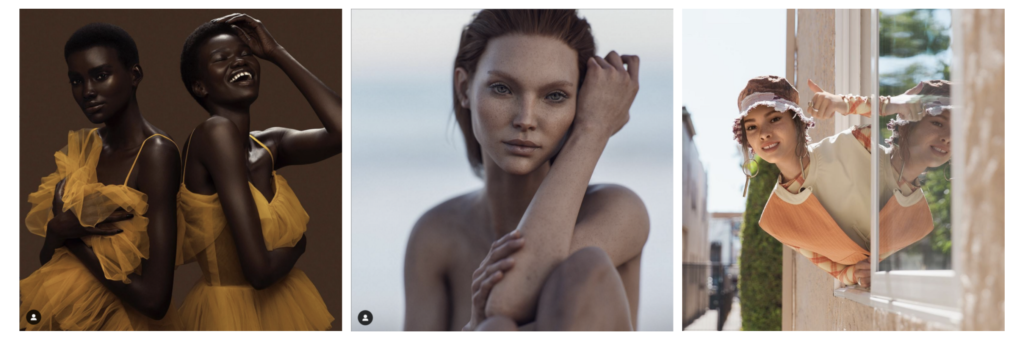

If Hatsune and Miko don’t look real, Instagram models like Shudu (the world’s very first digital supermodel), Dagny, Miquela and many others certainly have more lifelike, realistic features. However, they neither move independently nor interact with users.

AUTONOMOUS DIGITAL HUMANS

Even if characters like Miko can talk, move and interact, they are not autonomous. Luckily, some companies have developed realistic digital humans who can talk, hold a conversation, think, and move naturally.

Digital humans who can have fluid conversations

Douglas is a realistic, real-time, autonomous digital human. Currently in development, his job is to break down the barriers in human-to-machine interactions, yielding conversations that feel natural. Douglas uses numerous types of machine learning and Digital Domain R&D to reproduce the most common mannerisms people expect from a lifelike human. He can lead conversations and remember people, and because he responds as quickly as Alexa and Siri, his conversations flow naturally, without the long pauses that typically slow down other autonomous digital humans.

Douglas can switch faces, offering customers personalisation when he enters the market in 2021. Companies could soon expand past voice-only interactions, and photorealistic digital humans that behave like us, such as Douglas, could therefore become part of our society.

Digital humans with a virtual nervous system

New Zealand startup Soul Machines created Lia, an emotionally intelligent “artificial human”, with complex facial expressions and skin imperfections that make it hard to tell she is digital.

Lia has a virtual brain, a virtual nervous system, and digital versions of dopamine and oxytocin that affect her neurons and trigger her facial muscles. She can read your face, detecting your emotional state, and can learn and respond to external stimuli in real time.

Lia isn’t the only digital human Soul Machines has created. In fact, it is possible to change a digital person’s age, ethnicity, and gender through a digital DNA based on real people. Soul Machines’ idea is that in the near future we will engage with a digital person like her to get technical or customer support, book a holiday, get a medical consultation, find the ideal credit card, and so on. Companies like Daimler and Royal Bank of Scotland are already using Soul Machines’ technology.

DEEP FAKES

Deep fakes are video or audio clips created using AI. Having started as a very simple face-swapping technology, they now rival film-level CGI.

AI might not be able to protect us from the potentially disruptive effects of deep fakes in society

Deep fake technology can bring a number of benefits, but it can also introduce harms that are hard to fight. With deep fakes, we can make people appear to say and do things they never said or did. In addition, deep fakes are becoming cheaper to make, more realistic and increasingly resistant to detection. They could therefore worsen problems related to authenticity and truth, on top of the huge amount of fake news that is already circulating on the web. Deep fakes could expose individuals and businesses to new forms of exploitation, intimidation, and personal sabotage.

Zoom calls with deep fakes

An example that shows how easily and realistically deep fakes can be created is Take This Lollipop 2, a “haunted” Zoom call where looming figures appear in the background and deep fakes replace real callers, saying things the real callers never said. This project raises a cybersecurity concern, highlighting how cutting-edge deep fakes can spread misinformation in ways never seen before.

AI can create deep fake nudes in seconds

Another astonishing example is DeepNude, software that appeared in 2019 and was used to generate fake nude images. To generate them, DeepNude uses an AI technique called generative adversarial networks (GANs), and the resulting images vary in quality. Most look fake, with smeared or pixellated flesh, but some can easily be mistaken for real pictures. The website was taken down after receiving mainstream press coverage, which raised concerns about people misusing the software or deploying it for revenge porn.

Despite this, the software has continued to spread over backchannels, such as open source repositories and torrenting websites, and it’s now being used to power Telegram bots, which handle payments automatically to generate revenue for their creators. Telegram deep fake bots have been used to create more than 100,000 fake nudes. The fake nudes are made from images taken from social media and, once created, are shared and traded by users across different Telegram channels. The bots are free to use, but the free versions produce fake nudes with watermarks or only partial nudity. To “uncover” the pictures completely, users need to pay only a very small fee of a few US cents.