AI can produce more content than a human being, and produce it faster. The Press Association, for example, is able to produce 30,000 local news stories using AI (Best Practice AI). And as Forbes has pointed out, many media organizations, such as The New York Times, The Washington Post, and Yahoo! Sports, already use AI to create content.

Andreas Refsgaard is an artist and creative coder based in Copenhagen. He uses algorithms and machine learning to make unconventional connections between inputs and outputs. He works to demystify machine learning and help people understand it better, and he contributes to ml4a, a free resource on machine learning for artists. He brought all this experience to Design Matters 19, where he gave a talk about playful machine learning.

Among the many exciting things he has done, Andreas has experimented with models that use artificial intelligence and machine learning to produce poetry (Poems About Things and Start A Sentence). He also built an entire online bookstore selling AI-generated sci-fi novels (BOOKSBY.AI).

As Andreas’ projects demonstrate, content written by artificial intelligence today is no longer limited to formulaic writing built around the basic "wh-" questions (who, what, when, where, why). It can also imitate creative writing, such as novels and poetry.

In light of this, we asked Andreas about his work and discussed the future of writing based on machine learning. Machine learning doesn’t only make content writing simpler and faster. It can also surface huge numbers of potentially relevant topics to write about and adopt a tone of voice that is more persuasive to the reader. Because these tools can be deployed with malicious intent, we asked Andreas to reflect on the moral responsibility developers and designers hold when creating AI systems and machine learning models.

Let’s talk about your most recent project, Poems About Things. How did this idea come to your mind?

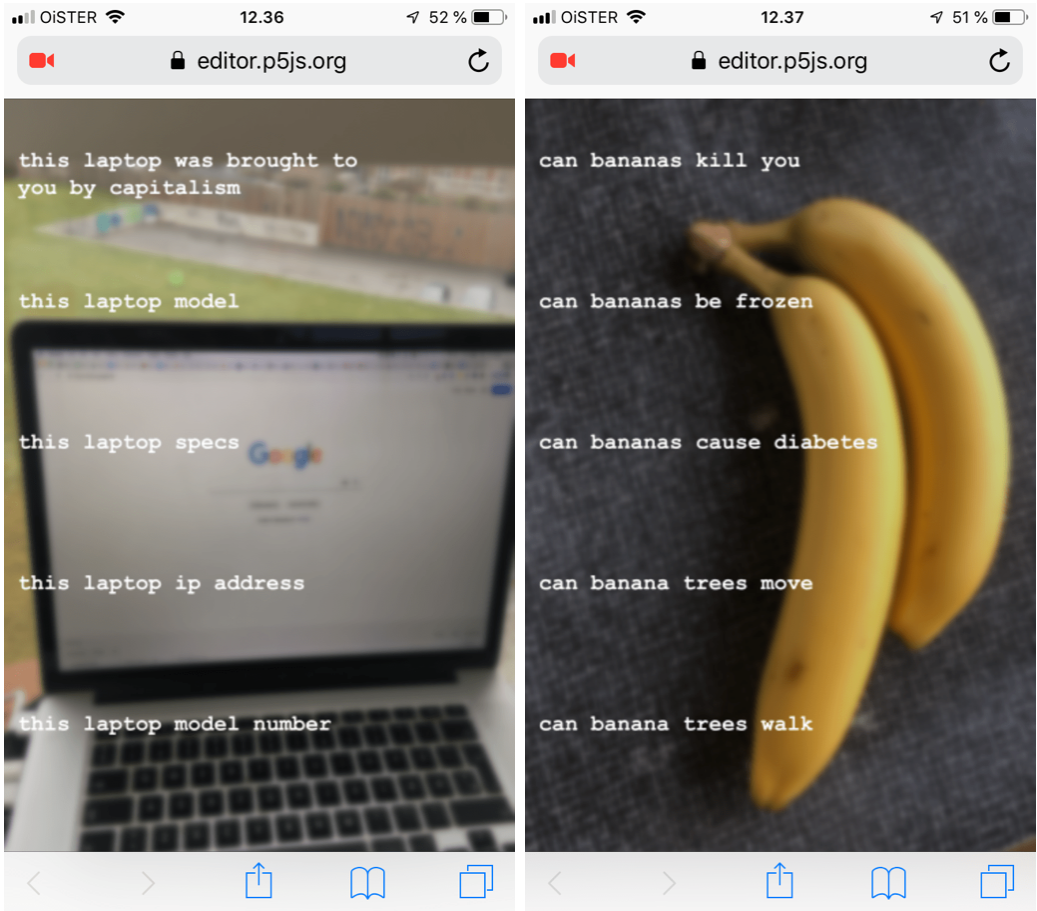

So Poems About Things is a mobile website that creates quirky sentences about the objects it sees through the camera. A machine learning model tries to guess what object it’s seeing. Then a short query is sent to the Google Suggest API, which sends back a list of sentences inspired by the detected object.

Much of what I do is just about taking pieces I haven’t put together before and combining them. Many of my ideas come from technology itself: I identify individual pieces of technology and find ways to use them. Poems About Things is essentially a combination of two things that already existed: an old project of mine called Start A Sentence, and MobileNet, a machine learning model that analyzes what it sees through a phone camera.

Did you face any challenges while working on Poems About Things?

The model behind Poems About Things has 1,000 categories and makes a lot of wrong guesses. For example, it might recognize a bench or a couch when you’re actually pointing at a chair. The model also has no category for people. Once I was sitting in front of the camera and the model thought I was a microphone.

The Google Suggest API, the service behind the up to 10 suggestions that appear as you type into the Google search bar, sometimes returns answers that are very specific to what Google thinks you want to find. For instance, Google will often suggest song lyrics if the first couple of words you type are related to a song. To avoid these mistakes, I built a small filtering mechanism between what the camera sees and the googling process. Still, I generally embrace mistakes like these, because I find them interesting and entertaining.
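The pipeline described above (classify the camera image, filter the detected label, turn it into a short query, keep the suggestions that come back) can be sketched in a few lines of Python. This is a minimal offline sketch under stated assumptions, not the project's actual code: the classifier output and the suggestion list are hard-coded stand-ins for real MobileNet and Google Suggest calls, and the names `pick_label`, `build_query`, `to_poem`, the `BLOCKED_LABELS` set, the 0.3 confidence threshold, and the "why is my" query prefix are all hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical block list: labels the sketch refuses to pass on to the
# search step, e.g. categories likely to trigger off-topic suggestions.
BLOCKED_LABELS = {"microphone"}


def pick_label(predictions: List[Tuple[str, float]],
               min_confidence: float = 0.3) -> Optional[str]:
    """Return the most confident label that passes the filter, or None."""
    for label, confidence in sorted(predictions, key=lambda p: p[1], reverse=True):
        if confidence < min_confidence:
            break  # every remaining prediction is even less confident
        if label.lower() not in BLOCKED_LABELS:
            return label
    return None


def build_query(label: str) -> str:
    """Turn a detected object into the start of a sentence (hypothetical prefix)."""
    return f"why is my {label.lower()}"


def to_poem(query: str, suggestions: List[str], max_lines: int = 10) -> List[str]:
    """Keep up to max_lines suggestions that actually continue the query."""
    lines = [s for s in suggestions if s.lower().startswith(query.lower())]
    return lines[:max_lines]


if __name__ == "__main__":
    # Stand-in for classifier output: (label, confidence) pairs.
    predictions = [("Microphone", 0.55), ("Folding chair", 0.40), ("Park bench", 0.05)]
    label = pick_label(predictions)
    if label is not None:
        query = build_query(label)
        # Stand-in for the list an autocomplete service might return.
        suggestions = ["why is my folding chair squeaking",
                       "folding chair sizes",
                       "why is my folding chair wobbly"]
        for line in to_poem(query, suggestions):
            print(line)
```

Here the filter sits between recognition and search, as in the interview: a blocked or low-confidence label never reaches the query step, and only suggestions that continue the query survive as lines of the "poem".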

Do you think object recognition will be used more in the future?

Yeah, I think so. It’s becoming easier to work with, and it is also becoming more and more reliable. I think I’m in a lucky position because what I do is purely artistic, so I can embrace mistakes. But if I were designing for health care, mistakes would be unacceptable.

So, what’s the future of object recognition and AI?

I think they will be integrated more into healthcare and services, but they will also play a bigger role in creative processes, whether it is art, music, or graphic design.

I think we will rely on algorithms more in certain systems. For example, imagine an architect drawing a sketch for a building, and a machine learning system that could recognize what the architect is drawing and suggest 10 alternative strokes or versions of the image. The architect could use the suggestions they like and simply disregard the rest.

We, as humans, can only produce a limited number of variations or ideas within a certain timeframe. Computers don’t have this limitation. In the future, we might see tools where people work together with machine learning algorithms. Hopefully, those algorithms will help them come up with a broader spectrum of ideas they wouldn’t have thought of themselves.

Do you think machine learning algorithms will influence the way designers work for the better or worse?

I think no technology is purely good or bad. If your work is full of repetition, an algorithm could potentially take your job in the future. Think about printing. People whose jobs consisted of repetitive tasks lost them when printing became digital. But at the same time, the digitalization of print created new jobs. So your job is threatened if it contains a lot of highly predictable, repetitive tasks.

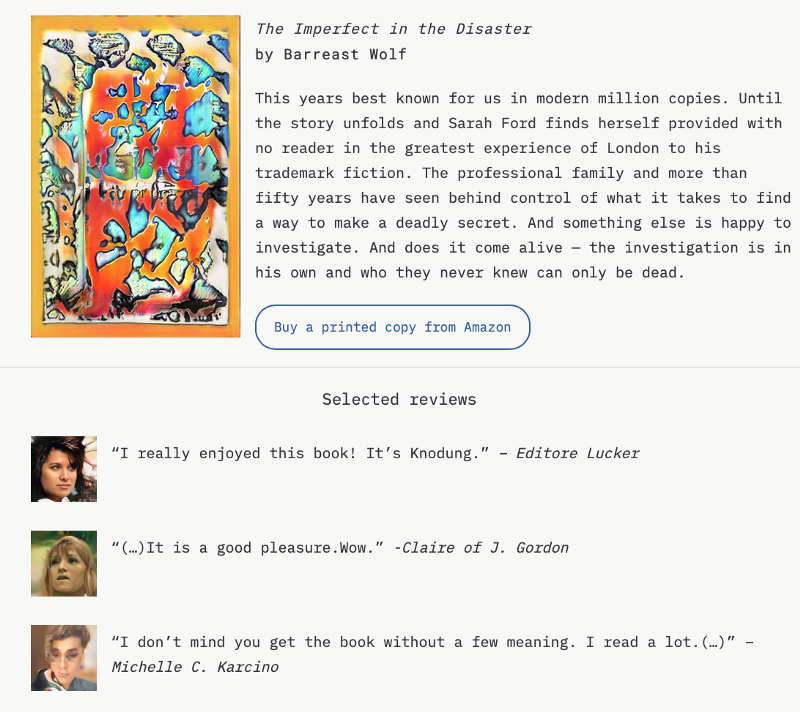

Let’s talk about BOOKSBY.AI. The text, the book covers, the reviews, and also the reviewers’ faces were created by AI. How did you do it?

In BOOKSBY.AI, none of the texts, titles, descriptions, or reviews of the books were written by humans. Even the book covers and the reviewers’ faces were created by AI. After being exposed to a large number of science fiction books, the AI learned to generate new ones that mimic their language and visual style. All the books, whose prices were also calculated by AI, can be ordered as printed paperbacks on Amazon.

This project was made in collaboration with data scientist Mikkel Loose, who did most of the hands-on work. I mainly came up with the idea of a shop where everything was created by a machine learning algorithm. I also suggested the different tools we could use for the project, but he ended up implementing most of it.

When you train a system, it’s hard to decide how much data to collect or how long to train it for. Also, nobody sponsored our project; we did it independently, so we had to work within some limits. But for us, it wasn’t about making the best book ever written by AI. It was about testing what was possible.

Many AI text generation tools have been created. One of them is GPT-2, released by OpenAI, whose creators worried about its possible misuse. Do you think such technologies can be used with malicious intent?

I think such technologies are already being misused. And they are really convincing, especially if you let the model mimic the style of a news article.

So, something like GPT-2 is potentially dangerous. I’d be surprised if it weren’t already being used for things like fake news. Now that this technology is available to anybody, even people without a technical background can create grammatically correct texts. You could type in a headline and a subheading about a specific political topic, and if that topic is mainstream enough, the model will write a decent text that continues your input.

Do creators hold any sort of moral responsibility when they design these tools in your opinion?

I think they have a responsibility, for sure. Let’s say Google, Microsoft, or Amazon has an image detection model. Its creators need to be aware of the categories they define. When we move from classification to something that generates text, like GPT-2, we need to think about what we’re doing even more carefully, because the ethical responsibility is greater.

The responsibilities and the ethical questions are different there, and you need to think about them even more.

I think we should educate people on how to work with these technologies. Since they aren’t inherently good or bad, they can be used in many ways and we need to have conversations on how we use them.

Do you think it’s possible to train a system to be free of bias?

There is already a lot of attention around bias. What I want to say is that you can never train a system that is objectively smart. You can’t train a system in a neutral way. The data you put into it will always be a selection you have made, and it will reflect your interpretation of the world. So always ask yourself whether what you create is biased, whether it discriminates against minorities or people who are not represented in the data set, and whether it reinforces social injustice or gender stereotypes.

Do you think artificial intelligence will be used in our everyday lives much more than it is now?

Yes, I think it will, but not in a Terminator kind of way; I don’t believe in those narratives. I think it’s going to be more integrated into our devices, and our homes will certainly be a place where our data is collected. So, as machine learning gets more integrated into our devices, we should find solutions that keep us from losing control over them. At least, I hope we will.